Sound to Colour

With a saturation system in place, we now need a way to feed it data from the game's audio. The observable saturation in an area should be proportional to the volume of an AudioSource (Docs), the object making noise, as heard from the position of the AudioListener (Docs), the player.

2D Sound vs 3D Spatial Sound

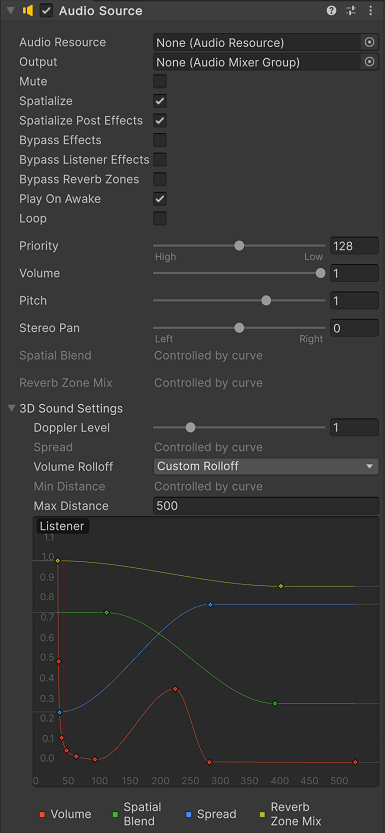

Unity has 3D spatial sound built into the AudioSource component. Moving the Spatial Blend slider changes how much of the sound is piped through the 2D path (source at the listener's position) versus the 3D path (source at the source's position). In this instance we want the spatial blend shifted completely to 3D, so that the sound is attenuated based on distance from the source. Paired with the min/max distance settings, this can be used to control the volume level in line with the objective.
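The same settings can also be applied from a script rather than the inspector. The following is a minimal sketch, not part of the original project: the class name `FullySpatialAudioSource`, the example distances, and the choice of a linear rolloff are all assumptions for illustration.

```csharp
using UnityEngine;

// Hypothetical helper (not from the original project): forces the attached
// AudioSource to be fully 3D so its volume depends on listener distance.
[RequireComponent(typeof(AudioSource))]
public class FullySpatialAudioSource : MonoBehaviour {
    // Assumed example values; tune these per scene.
    public float MinDistance = 1f;
    public float MaxDistance = 10f;

    private void Awake() {
        var source = GetComponent<AudioSource>();

        // 0 = fully 2D, 1 = fully 3D (same as the Spatial Blend slider)
        source.spatialBlend = 1f;

        // Assumption: linear rolloff, so the sound is silent at MaxDistance
        source.rolloffMode = AudioRolloffMode.Linear;

        // Full volume inside MinDistance, attenuated out to MaxDistance
        source.minDistance = MinDistance;
        source.maxDistance = MaxDistance;
    }
}
```

With a linear rolloff the audible radius matches `MaxDistance`, which makes it easier to line up with the distance the saturation area should span.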

Spectrum Data

After trying several different methods to get the volume of an AudioSource at the player's position, I changed direction and looked at the spectrum data it exposes via (API):

public void GetSpectrumData(float[] samples, int channel, FFTWindow window);

Summing the spectrum data gives a quick measure of the sound's intensity, which we can use to set the current range of the saturation shader. This leads to a nice pulsing effect in time with the rhythm of the music or sound.
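As a rough illustration of the idea, the sketch below reads the spectrum each frame and sums the bins into a single intensity value. The class name `SpectrumIntensityProbe` and the `Intensity` property are hypothetical; only `GetSpectrumData` is the real Unity call.

```csharp
using UnityEngine;

// Hypothetical probe (illustration only): exposes the summed spectrum of the
// attached AudioSource as a single, unsmoothed intensity value.
[RequireComponent(typeof(AudioSource))]
public class SpectrumIntensityProbe : MonoBehaviour {
    // GetSpectrumData needs a power-of-two length between 64 and 8192
    private readonly float[] _spectrum = new float[128];
    private AudioSource _source;

    // Latest summed intensity; 0 while nothing is playing
    public float Intensity { get; private set; }

    private void Start() {
        _source = GetComponent<AudioSource>();
    }

    private void Update() {
        if (!_source.isPlaying) {
            Intensity = 0f;
            return;
        }

        // Fill the array with the FFT of channel 0
        _source.GetSpectrumData(_spectrum, 0, FFTWindow.Rectangular);

        // Sum every frequency bin into one value
        float sum = 0f;
        for (int i = 0; i < _spectrum.Length; i++) {
            sum += _spectrum[i];
        }
        Intensity = sum;
    }
}
```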

Smooth transitions

The transition between high and low attenuation signals was a bit sharp, so I added some configurable damping via Mathf.SmoothDamp (Unity Script API) to the script to make the transitions more gradual.
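In isolation, the damping looks roughly like the sketch below. The class `SmoothedValue` and its fields are hypothetical names used only to show the call pattern: `Mathf.SmoothDamp` needs the current value, the target, a velocity it updates by reference between frames, and an approximate time to reach the target.

```csharp
using UnityEngine;

// Hypothetical example (illustration only): eases Current towards Target
// instead of snapping to it.
public class SmoothedValue : MonoBehaviour {
    // Value to move towards, e.g. a raw per-frame intensity
    public float Target;

    // Approximate time in seconds to reach the target; larger = smoother
    public float SmoothTime = 0.1f;

    // Smoothed value, updated once per frame
    public float Current { get; private set; }

    // Velocity state owned by SmoothDamp; must persist between frames
    private float _velocity;

    private void Update() {
        Current = Mathf.SmoothDamp(Current, Target, ref _velocity, SmoothTime);
    }
}
```

Because the velocity is carried over between calls, it has to live in a field rather than a local variable.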

Final Code

Main Class

    using System;
    using System.Linq;
    using Sirenix.OdinInspector; // assumed source of [ShowInInspector] (Odin Inspector)
    using UnityEngine;

    /// <summary>
    /// Drives the saturation provider range from the spectrum data of an attached AudioSource.
    /// </summary>
    public class SpectrumAudioSaturation : AudioSaturationProviderBase {
        /// <summary>
        /// Fast Fourier transform window.
        /// </summary>
        public FFTWindow AnalysisWindow = FFTWindow.Rectangular;

        /// <summary>
        /// Distance the area should span.
        /// </summary>
        public float MaxDistance = 10f;

        /// <summary>
        /// Cached array of spectrum data. Kept the same length as <see cref="_samples" />.
        /// </summary>
        public float[] Spectrum = new float[128];

        /// <summary>
        /// Length of time to smooth the value of <see cref="SaturationProvider.Range" />
        /// </summary>
        public float SpectrumSmoothTime = 0.1f;

        /// <summary>
        /// Number of spectrum samples to take.
        /// </summary>
        [SerializeField, HideInInspector]
        private int _samples = 128;

        /// <summary>
        /// Current velocity of the smoothing function
        /// </summary>
        private float _spectrumSmoothVelocity;

        /// <summary>
        /// Number of spectrum samples to take.
        /// </summary>
        [ShowInInspector]
        public int Samples {
            get { return _samples; }
            set {
                if (value == _samples) {
                    return;
                }

                // GetSpectrumData needs a power-of-two length between 64 and 8192
                _samples = Mathf.Clamp(Mathf.ClosestPowerOfTwo(value), 64, 8192);

                // Ensure we reset all dependent data
                Spectrum = new float[_samples];
            }
        }

        /// <summary>
        /// Ensure starting conditions
        /// </summary>
        protected override void Start() {
            base.Start();
            SaturationProvider.Enable();
        }

        /// <summary>
        /// Update data every frame
        /// </summary>
        protected void Update() {
            // Store spectrum data if the AudioSource is playing, zero out the data if not
            if (Source.isPlaying && Enabled) {
                if (Source.clip != null) {
                    Source.GetSpectrumData(Spectrum, 0, AnalysisWindow);
                }
            }
            else {
                // Clear in place rather than allocating a new array every frame
                Array.Clear(Spectrum, 0, Spectrum.Length);
            }

            // Smoothly transition SaturationProvider.Range towards the new target
            float targetRange = MaxDistance * Spectrum.Sum() * 2;
            SaturationProvider.Range = Mathf.SmoothDamp(SaturationProvider.Range, targetRange, ref _spectrumSmoothVelocity, SpectrumSmoothTime);
        }

        /// <summary>
        /// Pre/Re populate the <see cref="SaturationProvider" /> when added/reset
        /// </summary>
        private void Reset() {
            SaturationProvider = GetComponent<SaturationProvider>();
        }
    }

Base Component

    using Sirenix.OdinInspector; // assumed source of HideMonoScript, ReadOnly, ShowInInspector, PropertyOrder
    using UnityEngine;

    /// <summary>
    /// Base component for a SaturationProvider based on an AudioSource
    /// </summary>
    [RequireComponent(typeof(AudioSource), typeof(SaturationProvider)), HideMonoScript]
    public abstract class AudioSaturationProviderBase : MonoBehaviour {
        /// <summary>
        /// Should the component change saturation
        /// </summary>
        [ReadOnly, SerializeField]
        protected bool Enabled = true;

        /// <summary>
        /// Attached AudioSource
        /// </summary>
        protected AudioSource Source;

        /// <summary>
        /// Attached SaturationProvider
        /// </summary>
        [HideInInspector, SerializeField]
        private SaturationProvider _saturationProvider;

        /// <summary>
        /// Gets and sets the SaturationProvider
        /// </summary>
        [ShowInInspector, PropertyOrder(-10)]
        protected SaturationProvider SaturationProvider {
            get { return _saturationProvider; }
            set { _saturationProvider = value; }
        }

        /// <summary>
        /// Set up components
        /// </summary>
        protected virtual void Start() {
            // Allow an off-GameObject provider to be specified
            if (_saturationProvider == null) {
                SaturationProvider = GetComponent<SaturationProvider>();
            }

            Source = GetComponent<AudioSource>();
        }
    }

Conclusion

Coming up with the concept was simple, but figuring out the solution was quite complex and very time-consuming. In the end I couldn't find a way to extract information (that should exist) from the engine, and had to settle for a lesser solution. However, the result looks very nice.

Future

I'd like to develop the SaturationProvider and shader to vary the saturation radially based on the volume delta. Saturation would pulse out of the audio source like ripples on a pond: the higher the volume, the higher the saturation radiating out. The volume $v$ at distance $d$ could be picked from a historical data array $h$ containing the last few seconds of volume logs. With a sample count per second $s$, the array can be constructed as below:

$$h = [v_0, v_1, \ldots, v_n] \text{ where } n = \frac{\text{maxRange}}{\text{speedOfSound}} \cdot s$$

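A volume $v$ could then be picked from the array using $d$, assuming $v_0$ is the most recent sample so that more distant points read older values:

$$v = h[i] \text{ where } i = \left\lfloor \frac{d}{\text{maxRange}} \cdot n \right\rfloor$$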
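
As a rough worked example, assume $h[0]$ is the newest sample, so the index grows with the time the sound takes to travel the distance $d$. The numbers are illustrative only, not measurements: a maximum range of 10 m, a speed of sound of 343 m/s, $s = 1000$ samples per second, and a listener at $d = 5$ m.

$$n = \frac{10}{343} \cdot 1000 \approx 29, \qquad i = \left\lfloor \frac{5}{343} \cdot 1000 \right\rfloor = \lfloor 14.58 \rfloor = 14$$

Under these assumptions the array needs only about 30 entries, and a point 5 m from the source would show the volume logged roughly 14 ms earlier.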